Vulnerability description

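The multiprocessing package is Python's package for managing multiple processes. Its Manager class provides a way to create data that can be shared between processes, and even across machines over a network. A Manager runs a server that manages the shared objects, and other processes access those objects through proxies.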

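Root cause: the default serializer of Manager and BaseManager in the multiprocessing module is pickle, so sending a malicious pickle payload injects code and triggers the vulnerability. An attacker who exploits it successfully can execute arbitrary code on the victim host. The same applies to communication between a parent process and its child processes, which also passes messages as pickles: if, while a child process is running, an attacker can control the data that flows back to the parent, they can feed it malicious pickle data and trigger a pickle deserialization vulnerability.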
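The default serializer mentioned above can be confirmed directly from the constructor signature. A minimal check, assuming CPython 3 (inspect is used only for illustration):

```python
import inspect
from multiprocessing.managers import BaseManager, SyncManager

# BaseManager.__init__ uses serializer='pickle' unless told otherwise
params = inspect.signature(BaseManager.__init__).parameters
print(params["serializer"].default)  # pickle

# multiprocessing.Manager() returns a SyncManager, a BaseManager subclass,
# so it inherits the same default
print(issubclass(SyncManager, BaseManager))  # True
```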

Reproducing the vulnerability

The PoC is public.

server.py

```python
from multiprocessing.managers import BaseManager
```

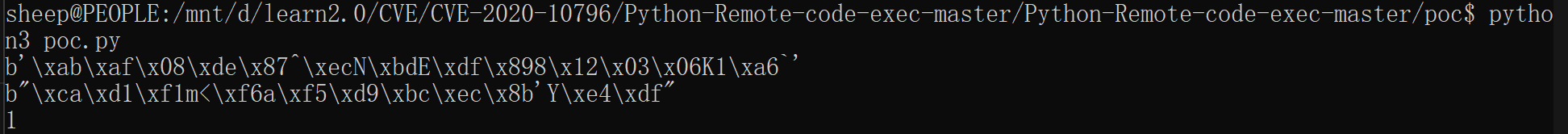
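Only the first line of server.py survives above. A minimal sketch of what such a server could look like, assuming a bare BaseManager with no registered types; the function name make_server and the default address are placeholders, not taken from the original PoC:

```python
from multiprocessing.managers import BaseManager

def make_server(address=("127.0.0.1", 50000)):
    """Hypothetical sketch of a vulnerable server.py (address is a placeholder).

    authkey=b"" makes the HMAC handshake key public knowledge, so any client
    can authenticate and then reach the server's pickle-based recv().
    """
    manager = BaseManager(address=address, authkey=b"")
    # get_server() builds the Server object in this process instead of
    # starting a child process the way start() would; the serializer is
    # left at its default, "pickle"
    return manager.get_server()
```

In a real script you would call `make_server().serve_forever()`, which blocks and handles incoming clients.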

poc.py

```python
import os
```

Cool, the command executed successfully.

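On the server side, be sure to set `authkey=b''`, otherwise the exploit will not run. The manager server performs an HMAC challenge/response with the authkey before it unpickles the first request, and when no authkey is given it defaults to the process's random `current_process().authkey`, which an attacker cannot know.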
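poc.py also survives only as its first line (`import os`). Payloads of this kind are typically built with `__reduce__`, which lets an object tell pickle which callable to invoke when it is loaded. A harmless sketch, assuming `eval` of an arithmetic expression as a stand-in for the `os.system` call a real PoC would make; the class name Payload is hypothetical:

```python
import pickle

class Payload:
    """Hypothetical attacker object: __reduce__ tells pickle how to 'rebuild' it."""
    def __reduce__(self):
        # On unpickling, pickle calls eval("6 * 7"); a real exploit would
        # return something like (os.system, ("id",)) instead
        return (eval, ("6 * 7",))

blob = pickle.dumps(Payload())
result = pickle.loads(blob)  # the call happens here, on the receiving side
print(result)  # 42
```

The important point is that the call runs inside `pickle.loads` itself, before the receiver has any chance to inspect what it got.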

Root-cause analysis

Understanding the multiprocessing library from the official documentation

The Pool class

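In this library you create a Pool object; its constructor already starts the worker processes, and work is then submitted with methods such as map(). (Pool has no start() method.)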
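A minimal sketch of that usage, assuming a POSIX system: it uses the "fork" start method so the workers inherit square() without re-importing the module. The function names square and run_pool are illustrative:

```python
import multiprocessing

def square(x):
    return x * x

def run_pool(values):
    # "fork" is POSIX-only; workers inherit this module's functions
    ctx = multiprocessing.get_context("fork")
    # The Pool constructor itself starts the two worker processes
    with ctx.Pool(processes=2) as pool:
        # Each task is sent to a worker as a pickle over a pipe,
        # and each result comes back the same way
        return pool.map(square, values)

print(run_pool([1, 2, 3, 4]))  # [1, 4, 9, 16]
```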

Implementation:

```python
def __init__(self, processes=None, initializer=None, initargs=(),
             maxtasksperchild=None, context=None):
```

The key step is to follow map() in the source and see what it does:

```python
return self._map_async(func, iterable, mapstar, chunksize).get()
```

Following it further into _map_async:

```python
self._check_running()
```

The function to focus on is _guarded_task_generation. Its implementation:

```python
def _guarded_task_generation(self, result_job, func, iterable):
```

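Its core purpose: pack each item of iterable into a task tuple for a worker to execute.

The tasks are then put into self._taskqueue, a queue internal to the parent process that is consumed by a handler thread.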

Next, follow the handler implementations. Two of them matter most:

Pool._handle_tasks

Pool._handle_results

The first one:

```python
def _handle_tasks(taskqueue, put, outqueue, pool, cache):
```

The put function is passed in by the Pool; it is set up here:

```python
def _setup_queues(self):
```

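Both _inqueue and _outqueue are multiprocessing SimpleQueue objects, and put is the send method of the inqueue's writer connection. Under the hood the queue is a pipe, so in the end it corresponds to file descriptors (fds).

send() serializes an object with pickle and writes it to the pipe; recv() reads from the pipe and deserializes the object.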

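> The two connection objects returned by Pipe() represent the two ends of the pipe. Each connection object has send() and recv() methods (among others). Note that data in a pipe may become corrupted if two processes (or threads) try to read from or write to the same end of the pipe at the same time. Of course there is no risk of corruption from processes using different ends of the pipe at the same time.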
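To see that the receiving end simply unpickles whatever bytes arrive, here is a single-process sketch; Payload is a hypothetical, harmless stand-in for malicious data that uses eval of an arithmetic expression:

```python
import pickle
from multiprocessing import Pipe

class Payload:
    # Hypothetical stand-in for attacker-controlled data
    def __reduce__(self):
        return (eval, ("6 * 7",))

# duplex=False: reader can only receive, writer can only send
reader, writer = Pipe(duplex=False)

# Push raw pickle bytes; send_bytes() does no pickling of its own
writer.send_bytes(pickle.dumps(Payload()))

# recv() reads a message and unpickles it, so eval runs right here
result = reader.recv()
print(result)  # 42
```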

Now the second function:

```python
def _handle_results(outqueue, get, cache):
```

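Same idea as above in the other direction: the parent process reads results from the result pipe and deserializes them.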

There is one more function, the worker loop run by each child process:

```python
while True:
```

This is the child process side: it reads and unpickles tasks, runs them, and sends the pickled results back.
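A simplified sketch of that child-side loop, run in a single process for illustration. It is not CPython's exact worker(); get and put stand in for the inqueue reader's recv and the outqueue writer's send, and the function name worker_loop is hypothetical:

```python
from multiprocessing import Pipe

def worker_loop(get, put):
    """Simplified sketch of the child side of Pool (not CPython's exact worker())."""
    while True:
        task = get()                 # unpickles whatever the parent wrote
        if task is None:             # sentinel: parent asks the worker to exit
            break
        job, i, func, args, kwds = task
        try:
            result = (True, func(*args, **kwds))
        except Exception as e:
            result = (False, e)
        put((job, i, result))        # pickled again on the way back

task_r, task_w = Pipe(duplex=False)
res_r, res_w = Pipe(duplex=False)
task_w.send((0, 0, abs, (-5,), {}))  # one task: abs(-5)
task_w.send(None)                    # then the stop sentinel
worker_loop(task_r.recv, res_w.send)
out = res_r.recv()
print(out)  # (0, 0, (True, 5))
```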

Key takeaway

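Normally, map() puts objects into the main process's task queue, and from there they go through:

main process pickles the object -> main process's write end (fd) -> child process reads from that pipe -> unpickles and executes the task -> pickles the result and writes it to the pipe the parent reads from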

On Unix, these fds are created with os.pipe().

Normally this process is safe and there is no vulnerability.

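However, if code running in a child process can overwrite a file or write data to /proc/self/fd/x, the main process reads that data directly and unpickles it, and that is where the vulnerability lies.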
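A single-process sketch of that idea, assuming a POSIX system: the bytes are written straight to the pipe's fd with os.write, bypassing Connection.send(), and recv() on the other end still unpickles them. It also shows the framing any such write has to reproduce, since recv() expects a 4-byte big-endian signed length before the pickle. Payload is a hypothetical, harmless stand-in:

```python
import os
import pickle
import struct
from multiprocessing import Pipe

class Payload:
    # Hypothetical stand-in for attacker data; a real exploit would call os.system
    def __reduce__(self):
        return (eval, ("6 * 7",))

reader, writer = Pipe(duplex=False)   # POSIX: backed by os.pipe()
blob = pickle.dumps(Payload())

# Write directly to the pipe's file descriptor. On Linux the same fd is also
# reachable as /proc/<pid>/fd/<N>. The header mirrors what send_bytes() emits
# for messages shorter than 2 GiB: struct "!i" length, then the payload.
os.write(writer.fileno(), struct.pack("!i", len(blob)) + blob)

result = reader.recv()  # the receiving side unpickles it, running eval
print(result)  # 42
```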

One caveat: writing the data with a plain write() does not work.

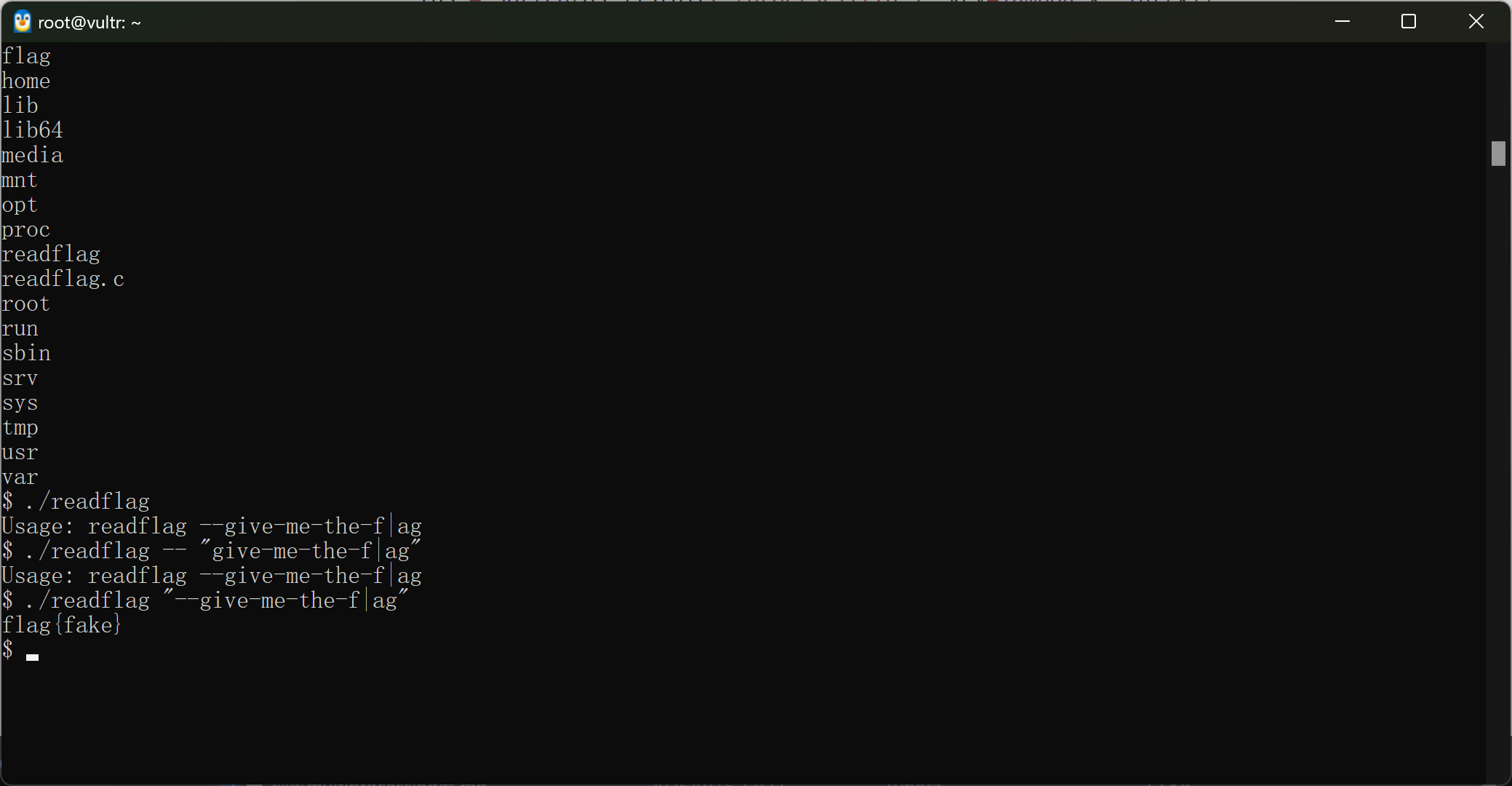

That was exactly the situation in the Hitcon challenge.

Determining the actual path involves some guesswork.

If you run the environment locally in Docker, you can look up the process's PID and inspect its fds, for example:

`ls -al /proc/7/fd`

```python
def list_fds_brief(label):
```
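Only the signature of the author's list_fds_brief survives. A hypothetical helper in the same spirit (not the author's code), assuming Linux: it reads /proc/&lt;pid&gt;/fd, the same data `ls -al /proc/<pid>/fd` shows, and maps each fd to its link target:

```python
import os

def list_fds(pid="self"):
    """Hypothetical helper (not the author's list_fds_brief): map fd -> target.

    Linux-only: reads the symlinks under /proc/<pid>/fd.
    """
    base = f"/proc/{pid}/fd"
    fds = {}
    for name in os.listdir(base):
        try:
            fds[int(name)] = os.readlink(os.path.join(base, name))
        except OSError:
            continue  # fd closed between listdir() and readlink()
    return fds

for fd, target in sorted(list_fds().items()):
    print(fd, target)  # pipes show up as "pipe:[<inode>]"
```

Both ends of the same pipe point at the same `pipe:[<inode>]` target, which helps when matching fds between parent and child.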

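Interestingly, as my understanding of this CVE deepened, the focus had to shift to the Image.save method. Is it lower-level? What other, even lower-level functions are there?

Besides that question, there is also the implementation of the PoC itself to analyze.

This post, however, focuses on the CVE, so I'll stop here.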

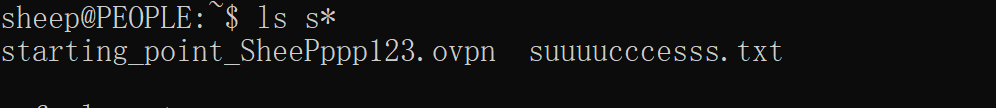

Afterword

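I spent almost half a day designing a similar challenge, only to find that deserialization never happened: the logs showed it never even reached the point of asking for the key. Puzzled, I kept stripping the code down, but the problem persisted. In the end I realized the cause: because I only wanted to test the CVE, I naively assumed the essence was just overwriting the file descriptor with a file, so I used open(). That did not work, and it turned out I needed the lower-level img.save.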

For example:

```text
Process ForkPoolWorker-2:
```

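This shows the deserialization succeeded.

The fds that worked were almost always 6 or 10; if it fails, restart the environment and try again. It depends a little on luck, but it does pop a shell.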